Overview

uOttaChat is an LLM-powered service originally built for my university's hackathon, uOttaHack. I developed it from scratch—from setting up the infrastructure and building the API service layer to refining it through prompt engineering. I later open-sourced it so that any hackathon in the MLH community can use it to build their own implementation.

Background

As Co-Director, I helped relaunch and scale uOttaHack a few years ago: I built an amazing team of 18 students, raised over $175K within three years, and grew a community of over 4,000 students excited about our events. If you’re curious, I wrote a small article below! Nowadays, as a senior in college, I'm an advisor to the team and help out wherever I can, such as with fun tools like uOttaChat :)

This project began because we recognized a key pain point in organizing hackathons: getting accurate, timely information to participants. Not every volunteer or organizer knows every detail about an event, and nobody expects them to. In the past, we relied on participant guides, dedicated support channels, and FAQs, but this approach felt static and offered a poor user experience. uOttaChat was created to deliver information faster and more accurately. It serves as the first line of support, letting participants ask specific questions about the event.

Participants can ask about:

- Technical challenges & details about our sponsors

- Real-time event schedule information

- Logistics (food, deadlines, venue floor plans, etc.)

- Key resources (links to our Discord, submission platform, FAQ, etc.)

- And anything else related to the event!

We've integrated uOttaChat into both our live-site (live.uottahack.ca) and our Discord server, allowing participants to quickly access information through multiple platforms.

Tech Stack

- Backend: Python, FastAPI

- LLM Provider: Cohere (chat model)

- Hosting: AWS EC2

- Frontend Integration: React

- Discord Bot: Discord API

- Prompt Engineering: Custom chaining, reflection step, and dynamic context injection

System Design Diagram

Demo

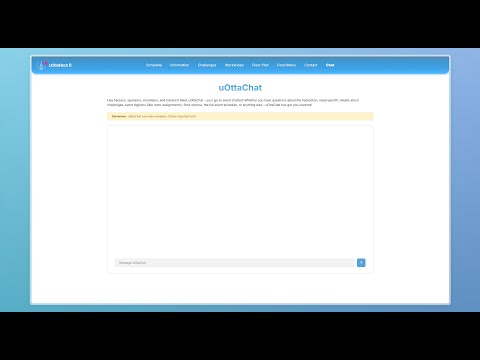

Access via Live-Site

💬 Participants can chat with uOttaChat directly on the live-site (2025 event chat page):

🔗 Live-Site URL: live.uottahack.ca

Prompt Engineering & Customization

To improve response accuracy, we implemented:

🔹 Custom Chained Prompts: A second prompt reflects on the initial answer and refines it before the response is returned.

🔹 Reference Data Injection: We dynamically fetch structured event data to enhance responses.

🔹 Context Retention: Chat history is stored using session handling.
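Session-based context retention could look something like the sketch below. It is a minimal in-memory version, assuming each client sends a session ID with its messages; the `SessionStore` class and the `max_messages` cap are hypothetical names. Because the history lives in process memory, it is lost on restart and is not shared across multiple server instances.

```python
from collections import defaultdict, deque
from typing import Dict, List


class SessionStore:
    """Hypothetical in-memory chat history, keyed by session ID."""

    def __init__(self, max_messages: int = 10):
        # deque(maxlen=...) drops the oldest message once the cap is reached,
        # which keeps prompts within the model's context window
        self._history = defaultdict(lambda: deque(maxlen=max_messages))

    def append(self, session_id: str, role: str, content: str) -> None:
        self._history[session_id].append({"role": role, "content": content})

    def messages(self, session_id: str) -> List[Dict[str, str]]:
        # Return a copy so callers can extend it without mutating the stored history;
        # unknown sessions simply have no history
        return list(self._history.get(session_id, ()))


store = SessionStore(max_messages=4)
store.append("session-1", "user", "Where is lunch being served?")
store.append("session-1", "assistant", "(model answer)")
# Prior turns are prepended to the messages of the next request
next_messages = store.messages("session-1") + [{"role": "user", "content": "And dinner?"}]
print(len(next_messages))  # 3
```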

Reference Data Integration

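```python
import os

import requests
from fastapi import HTTPException

# Assumption: the reference data URL is supplied through an environment variable
REF_DATA_API_URL = os.environ.get("REF_DATA_API_URL", "")


def fetch_reference_data() -> str:
    """Fetch reference data from REF_DATA_API_URL."""
    try:
        response = requests.get(REF_DATA_API_URL, timeout=5)
        response.raise_for_status()  # Treat 4xx/5xx responses as errors
        return response.text
    except requests.RequestException as e:
        print("Error fetching reference data:", e)
        raise HTTPException(status_code=500, detail="Error fetching reference data") from e
```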
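Since the reference data is fetched for every question, one option is to cache it briefly. The sketch below is hypothetical and not part of the original service: `CachedReference` wraps any zero-argument fetch function (such as `fetch_reference_data`) and reuses the result for `ttl_seconds`, assuming a short TTL keeps real-time schedule changes acceptably fresh.

```python
import time
from typing import Callable, Optional


class CachedReference:
    """Hypothetical wrapper that caches reference data for a short time."""

    def __init__(self, fetch: Callable[[], str], ttl_seconds: float = 60.0,
                 clock: Callable[[], float] = time.monotonic):
        self._fetch = fetch
        self._ttl = ttl_seconds
        self._clock = clock  # Injectable clock makes expiry easy to test
        self._value: Optional[str] = None
        self._fetched_at = 0.0

    def get(self) -> str:
        now = self._clock()
        # Refetch when nothing is cached yet or the cached copy has expired;
        # if the fetch raises, the exception propagates to the caller
        if self._value is None or now - self._fetched_at >= self._ttl:
            self._value = self._fetch()
            self._fetched_at = now
        return self._value


fetch_count = 0


def fake_fetch() -> str:
    global fetch_count
    fetch_count += 1
    return "event schedule"


ref = CachedReference(fake_fetch, ttl_seconds=60)
ref.get()
ref.get()  # Served from the cache
print(fetch_count)  # 1
```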

Two-Step Prompting

1. Initial Response:

```python
{
    "messages": [
        {
            "role": "system",
            "content": "You are a chatbot for uOttaHack 7, a student hackathon at the University of Ottawa. Your job is to assist participants with event-related questions using only the provided reference data."
        },
        {"role": "user", "content": user_message}
    ],
    "documents": [reference_data]
}
```

2. Reflection Step:

```python
{
    "messages": [
        # Previous context
        {
            "role": "system",
            "content": "Please reflect on the previous answer and provide any improvements or insights. Make sure your answers are fact-based on the document of truths about uOttaHack."
        }
    ],
    "documents": [reference_data]
}
```
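The two steps could be chained roughly as follows. To stay independent of any particular SDK version, this sketch takes a hypothetical `chat` callable that accepts a payload shaped like the ones above and returns the model's reply text; in the real service this would wrap the Cohere client call. How the initial answer is carried into the "previous context" (here, as an `assistant` message) is also an assumption.

```python
from typing import Callable, Dict, List

SYSTEM_PROMPT = (
    "You are a chatbot for uOttaHack 7, a student hackathon at the University of Ottawa. "
    "Your job is to assist participants with event-related questions using only the provided reference data."
)
REFLECTION_PROMPT = (
    "Please reflect on the previous answer and provide any improvements or insights. "
    "Make sure your answers are fact-based on the document of truths about uOttaHack."
)

# Hypothetical stand-in for the LLM call: payload dict in, reply text out
ChatFn = Callable[[Dict], str]


def answer_with_reflection(chat: ChatFn, user_message: str, reference_data: str) -> str:
    # Step 1: initial response grounded in the reference data
    messages: List[Dict[str, str]] = [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": user_message},
    ]
    initial = chat({"messages": messages, "documents": [reference_data]})

    # Step 2: keep the previous context, add the initial answer, then ask for a reflection
    messages = messages + [
        {"role": "assistant", "content": initial},
        {"role": "system", "content": REFLECTION_PROMPT},
    ]
    return chat({"messages": messages, "documents": [reference_data]})


def fake_chat(payload: Dict) -> str:
    return f"reply #{len(payload['messages'])}"


print(answer_with_reflection(fake_chat, "Where is lunch?", "reference data"))  # reply #4
```

Passing the model call in as a parameter keeps the chaining logic testable without network access, and makes it easy to swap providers or SDK versions.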

Discord Bot Integration

The service is also connected to a Discord bot, allowing participants to ask uOttaChat questions by mentioning the bot in chat.

🔹 Uses the Discord API to process messages.

Example:

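```python
import discord

intents = discord.Intents.default()
intents.message_content = True  # Required for the bot to read message text
client = discord.Client(intents=intents)


@client.event
async def on_message(message):
    # Ignore the bot's own messages
    if message.author == client.user:
        return
    # Only respond when the bot is @-mentioned
    if client.user.mentioned_in(message):
        query = message.content.replace(f"<@{client.user.id}>", "").strip()
        # fetch_reference_file() and get_ai_response() are assumed to be defined elsewhere in the bot
        reference_data = fetch_reference_file()
        response = get_ai_response(query, reference_data)
        await message.channel.send(response)
```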
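The `replace` call above strips only the `<@id>` mention form. Discord has also used a `<@!id>` form for nickname mentions, so a slightly more tolerant version might use a regular expression. `strip_bot_mention` is a hypothetical helper name; this is a sketch rather than the bot's actual code:

```python
import re


def strip_bot_mention(content: str, bot_id: int) -> str:
    """Remove <@id> and <@!id> mentions of the bot, then trim surrounding whitespace."""
    # The trailing ">" ensures a shorter ID never matches inside a longer one
    pattern = rf"<@!?{bot_id}>"
    return re.sub(pattern, "", content).strip()


print(strip_bot_mention("<@!1234> when does judging start?", 1234))
# when does judging start?
```

Inside `on_message`, the call would be `strip_bot_mention(message.content, client.user.id)`.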

Open-Sourcing

The following components are available in the GitHub repo below:

- UI Component – Frontend code and integration examples

- API Backend Code – Python/FastAPI service for handling requests

- Example Live Dataset – Sample data and reference files for testing

- Discord Bot Configuration – Deployment and integration scripts

- NGINX & SSL Support – Production-ready deployment scripts and a staging endpoint

All resources are available on the uOttaHack GitHub organization page and in the project repo (see link below). We encourage the community to build on our code, whether by adding new features, improving performance, or tailoring the chatbot to different event needs. All contributions are welcome, and the README explains how to get started!